Majeed Kazemitabaar

PhD Candidate

Department of Computer Science

University of Toronto

# About Me

I'm Majeed, an incoming Assistant Professor in the Department of Computing Science at the University of Alberta and a fellow at the Alberta Machine Intelligence Institute (Amii), starting in January 2026. My research in Human-Computer Interaction addresses fundamental challenges of interaction and cognition that arise as programming with generative AI evolves.

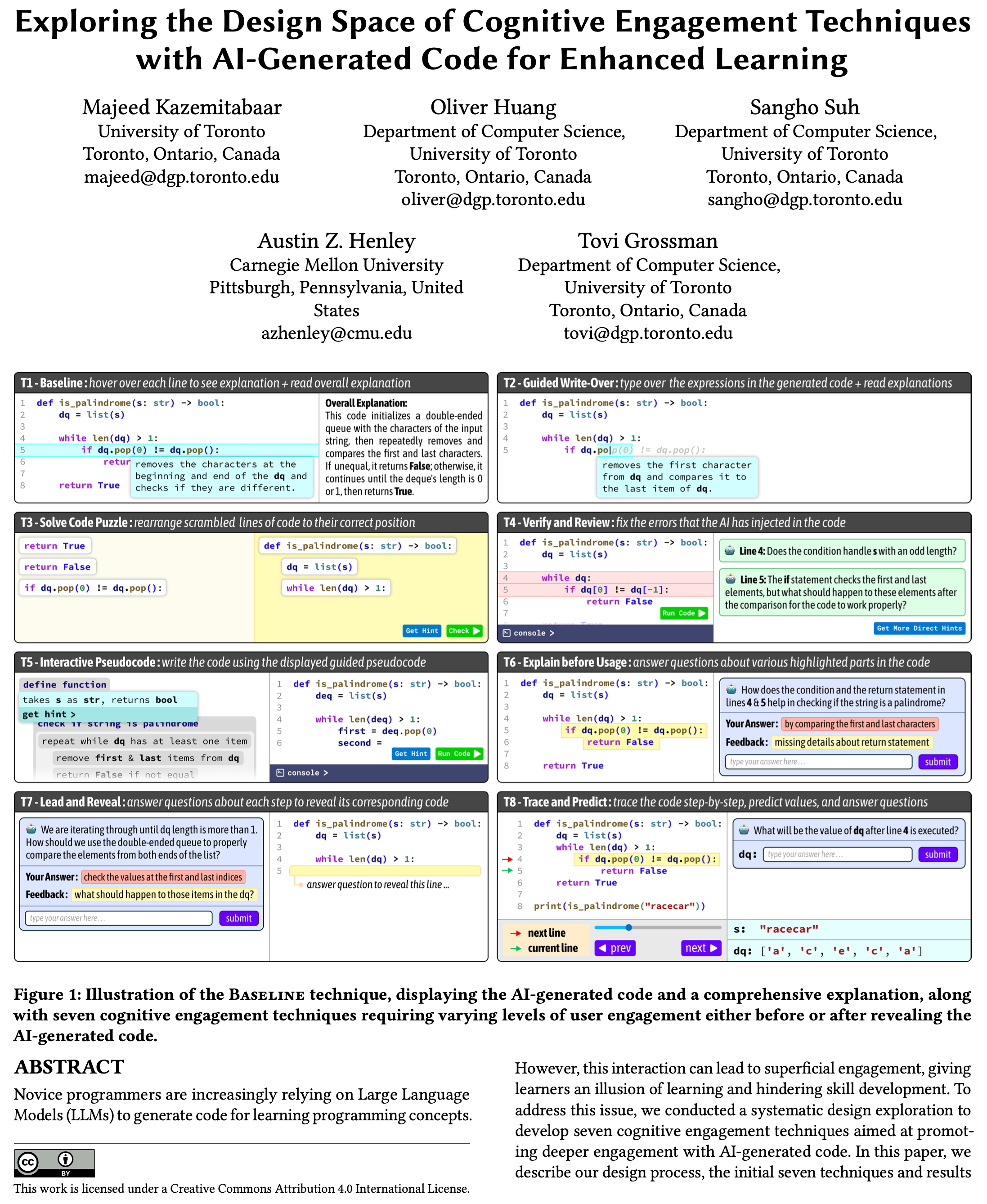

My work includes (a) studying how AI affects learning to code, including the risk of over-reliance [C.6] and [C.7], (b) designing novel interfaces and interventions that cognitively engage programmers with AI-generated solutions [C.10], (c) developing human-AI interactions that let users edit the AI's chain-of-thought reasoning [C.9], and (d) deploying pedagogical AI assistants that promote independent problem-solving [C.8].

# Latest Updates

- SEP 2025 • Co-founded LearnAid, an AI teaching assistant, and deployed it in three University of Toronto courses with 4,000 students.

- APR 2025 • Co-organized the CHI 2025 workshop on "AI tools that augment human cognition" [EA.3].

- JAN 2025 • Our $300,000 LEAF grant to develop the next generation of CodeAid [C.8] was approved!

# Full Conference Papers

[C.10] Exploring the Design Space of Cognitive Engagement Techniques with AI-Generated Code for Enhanced Learning

IUI 2025 • ACM Conference on Intelligent User Interfaces

[C.9] Improving Steering and Verification in AI-Assisted Data Analysis with Interactive Task Decomposition

UIST 2024 • ACM Symposium on User Interface Software Technology

[C.8] CodeAid: Evaluating a Classroom Deployment of an LLM-based Programming Assistant that Balances Student and Educator Needs

CHI 2024 • ACM Conference on Human Factors in Computing Systems

[C.7] How Novices Use LLM-Based Code Generators to Solve CS1 Coding Tasks in a Self-Paced Learning Environment

Koli Calling 2023 • ACM Koli Calling Conference on Computing Education Research

[C.6] Studying the effect of AI Code Generators on Supporting Novice Learners in Introductory Programming

CHI 2023 • ACM Conference on Human Factors in Computing Systems

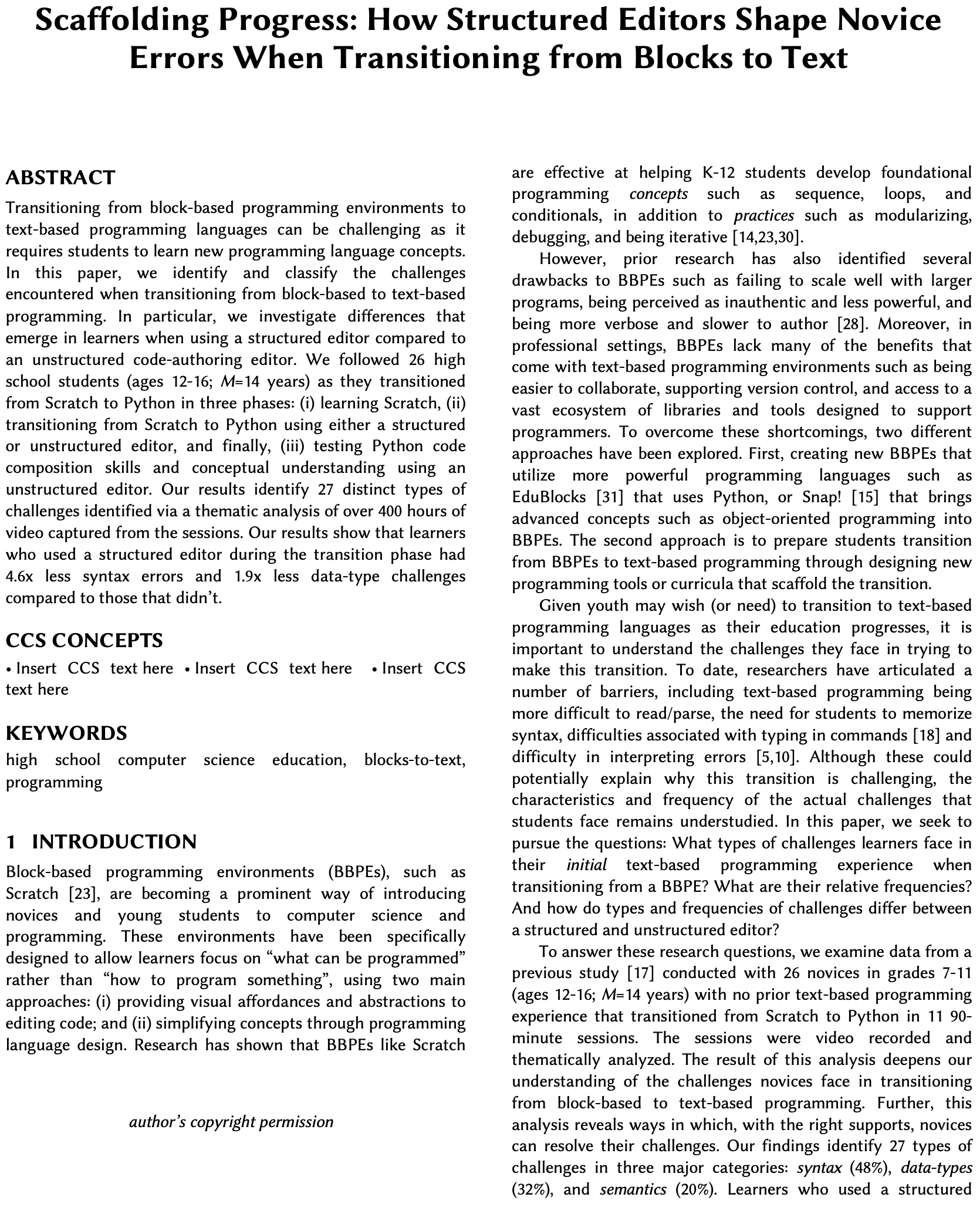

[C.5] Scaffolding Progress: How Structured Editors Shape Novice Errors When Transitioning from Blocks to Text

SIGCSE 2023 • ACM Technical Symposium on Computer Science Education

[C.4] CodeStruct: Design and Evaluation of an Intermediary Programming Environment for Novices to Transition from Scratch to Python

IDC 2022 • ACM Conference on Interaction Design and Children

[C.3] Bifröst: Visualizing and Checking Behavior of Embedded Systems across Hardware and Software

UIST 2017 • ACM Symposium on User Interface Software Technology

[C.2] MakerWear: A Tangible Approach to Interactive Wearable Creation for Children

CHI 2017 • ACM Conference on Human Factors in Computing Systems

Best Paper Award

[C.1] Activities Performed by Programmers while Using Framework Examples as a Guide

SAC 2014 • ACM Symposium on Applied Computing

# Extended Abstracts

[EA.3] Tools for Thought: Research and Design for Understanding, Protecting, and Augmenting Human Cognition with Generative AI

CHI 2025 Workshop (Accepted) • ACM Conference on Human Factors in Computing Systems

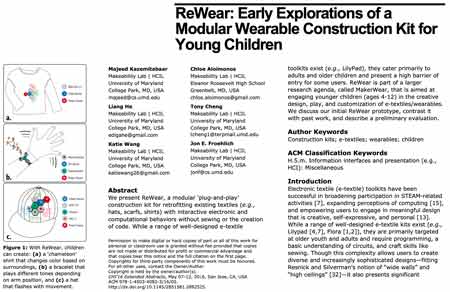

[EA.2] ReWear: Early Explorations of a Modular Wearable Construction Kit for Young Children

CHI 2016 Late-Breaking Work • ACM Conference on Human Factors in Computing Systems

Best Late-Breaking Work Award

[EA.1] MakerShoe: Towards a Wearable E-Textile Construction Kit to Support Creativity, Playful Making, and Self-Expression

IDC 2015 Demo • ACM Conference on Interaction Design and Children

# Theses

Balancing Productivity and Cognitive Engagement in AI-Assisted Programming

PhD Thesis • University of Toronto • Advisor: Tovi Grossman

MakerWear: A Tangible Construction Kit for Young Children to Create Interactive Wearables

MSc Thesis • University of Maryland, College Park • Advisor: Jon E. Froehlich

# Advising

Sepehr Hosseini • 2024 • MSc Computer Science • University of Toronto

Leading the design, development, and evaluation of an AI-powered semantic mini-map to enhance navigation in computational notebooks.

Oliver Huang • 2023 - 2024 • BSc Computer Science • University of Toronto

Design and implementation of cognitive engagement techniques with AI-generated code.

Research Papers [C.10]

Chase McDougall • 2023 - 2024 • BSc Engineering Sciences • University of Toronto

EngSci thesis supervisor: "Personalized Gamification to Increase Student Engagement in Self-Paced Introductory Programming."

Harry Ye • 2023 • BSc Computer Science • University of Toronto

Qualitative analysis of student queries to CodeAid and of survey responses.

Research Papers [C.8]

Justin Chow • 2022 • BSc Engineering Sciences • University of Toronto

Conducting user studies to compare learning to code with and without AI code generators.

Research Papers [C.6]

Carl Ka To Ma • 2022 • BSc Engineering Sciences • University of Toronto

Conducting user studies to compare learning to code with and without AI code generators.

Research Papers [C.6]

Viktar Chyhir • 2021 - 2022 • BSc Computer Science • University of Toronto

Design and development of CodeStruct and conducting user studies.

Research Papers [C.4][C.5]

Alex Jiao • 2016 • BSc Electrical Engineering • University of Maryland

Development of MakerWear and conducting user studies.

Research Papers [C.2]

Jason McPeak • 2016 • BSc Computer Engineering • University of Maryland

Design and development of MakerWear and conducting user studies.

Research Papers [C.2]

Katie Wang • 2015 • BSc Computer Science • University of Maryland

Design and development of ReWear and conducting user studies.

Research Papers [EA.2]

Tony Cheng • 2015 • BSc Computer Science • University of Maryland

Design and development of ReWear and conducting user studies.

Research Papers [EA.2]

Chloe Aloimonos • 2015 • High School Student

Design and development of ReWear and conducting user studies.

Research Papers [EA.2]